Understanding Hidden Computations in Chain-of-Thought Reasoning

Recent research (Pfau et al.) has shown that transformer models can retain their reasoning capabilities even when Chain-of-Thought (COT) steps are replaced with filler characters. This post presents my investigation into the mechanisms behind these hidden computations, using the 3SUM task as an experimental framework.

Background:

Chain-of-Thought prompting has emerged as an effective method for enabling language models to perform complex reasoning tasks. The technique typically involves generating intermediate computational steps before producing a final answer. However, Pfau et al. showed that, on certain algorithmic tasks, models trained with these intermediate steps replaced by filler tokens (e.g., “…”) can still reach the correct answer.

To investigate this phenomenon, I focused on the 3SUM task: identifying three numbers in a set that sum to zero. While conceptually straightforward, this task serves as an effective proxy for studying more complex reasoning processes.
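As a concrete reference point, the task as described above can be solved by brute force. This is only an illustrative sketch: the function name `find_zero_triple` is my own, and the exact task encoding used in the experiments may differ.

```python
from itertools import combinations

def find_zero_triple(numbers):
    """Return the first triple of entries that sums to zero, or None."""
    # Check every unordered choice of three entries (O(n^3) time).
    for triple in combinations(numbers, 3):
        if sum(triple) == 0:
            return triple
    return None

print(find_zero_triple([3, -1, 4, -2, 7]))  # (3, -1, -2)
print(find_zero_triple([1, 2, 4]))          # None
```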

Experimental Setup:

My analysis used a 34M parameter LLaMA model with the following specifications:

- 4 layers

- 384 hidden dimension

- 6 attention heads

The model was trained on hidden COT sequences for the 3SUM task (sequences in which the intermediate steps are replaced by filler tokens), allowing me to analyze how it processes and utilizes filler tokens during reasoning.
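To make the idea of a hidden COT sequence concrete, here is a minimal sketch of how one could be derived from an ordinary COT sequence. The token format, the `.` filler symbol, and the function name `hide_chain_of_thought` are all hypothetical; the actual data pipeline lives in the linked repository and may look different.

```python
FILLER = "."  # assumed filler symbol, chosen for illustration only

def hide_chain_of_thought(question, cot_steps, answer, filler=FILLER):
    """Replace every intermediate COT token with a filler token.

    The question and the final answer are kept, so the sequence length
    (and therefore the number of positions available for computation)
    is the same as in the visible-COT version.
    """
    hidden_steps = [filler] * len(cot_steps)
    return list(question) + hidden_steps + [answer]

visible_cot = ["s1", "s2", "s3"]  # placeholder intermediate steps
print(hide_chain_of_thought(["3", "-1", "-2", ":"], visible_cot, "True"))
```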

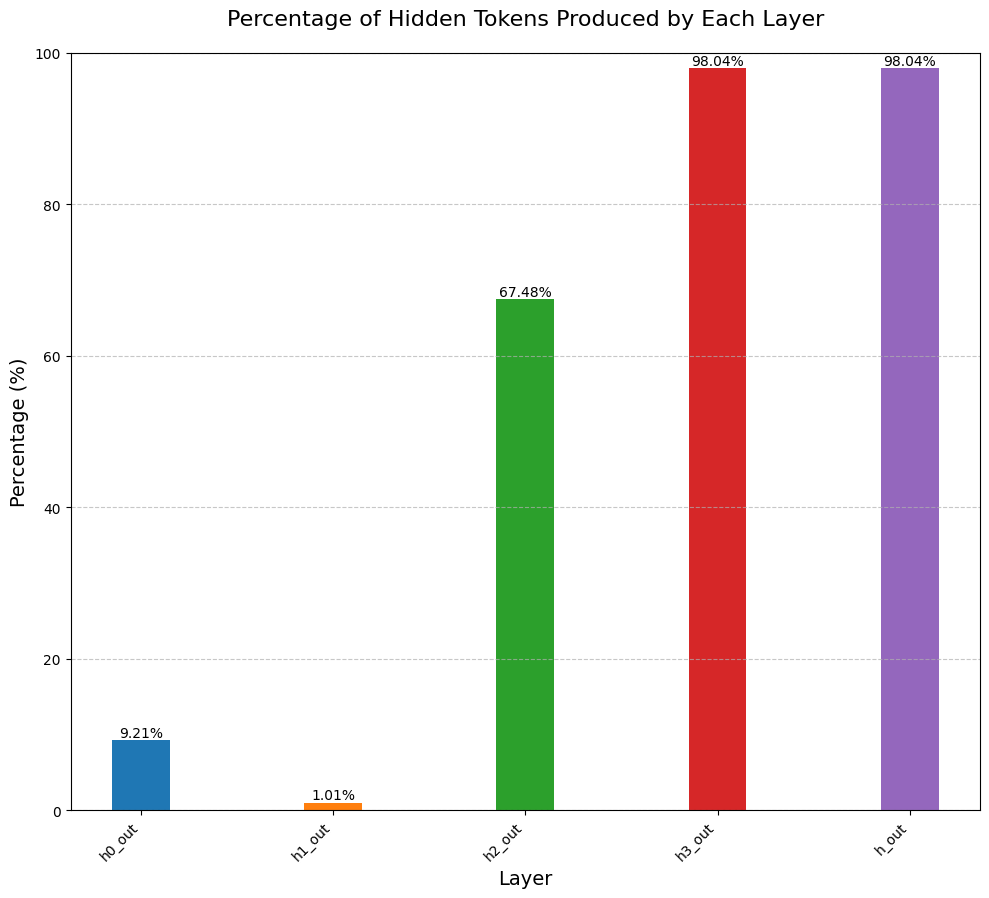

Layer-wise Analysis:

My analysis revealed distinct patterns in how representations evolve across the model’s layers:

- Initial layers primarily process raw numerical sequences

- Filler tokens become prominent from the third layer onward

- Final layers show significant reliance on filler token representations

This progression suggests that the model first processes the numerical inputs and shifts toward filler-token representations only in its later layers.
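One common way to obtain a layer-wise view like this is the logit lens listed in the references: project the hidden state at each layer through the unembedding matrix and look at which tokens come out on top. The sketch below is a toy, pure-Python version under stated assumptions. It uses made-up two-dimensional vectors, omits the final normalization layer that a real implementation would apply, and I am assuming rather than asserting that the analysis above was done exactly this way.

```python
def logit_lens(hidden_states, unembedding, vocab):
    """Rank the vocabulary at every layer.

    hidden_states: one vector per layer (for a single position)
    unembedding:   one row per vocabulary entry
    vocab:         token strings, aligned with the rows of `unembedding`
    """
    rankings = []
    for h in hidden_states:
        # Logit of each token = dot product of its unembedding row with h.
        logits = [sum(w * x for w, x in zip(row, h)) for row in unembedding]
        order = sorted(range(len(vocab)), key=lambda i: logits[i], reverse=True)
        rankings.append([vocab[i] for i in order])
    return rankings

# Toy values, invented for illustration.
vocab = [".", "7", "2"]
unembedding = [[0.0, 1.0], [1.0, 0.0], [-1.0, -1.0]]
hidden_states = [[1.0, 0.0], [0.6, 1.0]]  # early layer, late layer

for layer, ranked in enumerate(logit_lens(hidden_states, unembedding, vocab)):
    print(layer, ranked)
# 0 ['7', '.', '2']
# 1 ['.', '7', '2']
```

In this toy example the digit is ranked first at the early layer, while the filler takes over at the later layer and the digit drops to rank 2.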

Token Ranking Analysis:

Examination of token probabilities revealed consistent patterns:

- Filler tokens consistently emerged as the highest-ranked tokens

- Original, non-filler COT sequences remained present in lower-ranked positions

This finding supports the hypothesis that the model maintains original computational paths while using filler tokens as an overlay.
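The ranking described above can be sketched as follows: turn the logits at one position into probabilities and sort them. The logit values and token strings are invented for illustration, and `rank_tokens` is a hypothetical helper name.

```python
import math

def softmax(logits):
    """Convert a {token: logit} mapping into {token: probability}."""
    peak = max(logits.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(val - peak) for tok, val in logits.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}

def rank_tokens(logits):
    """Return (token, probability) pairs, most likely first."""
    probs = softmax(logits)
    return sorted(probs.items(), key=lambda kv: kv[1], reverse=True)

# Toy position where the filler is rank 1 and a COT token is rank 2.
ranked = rank_tokens({".": 5.0, "7": 3.0, "2": 0.5})
for rank, (token, prob) in enumerate(ranked, start=1):
    print(rank, token, round(prob, 3))
```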

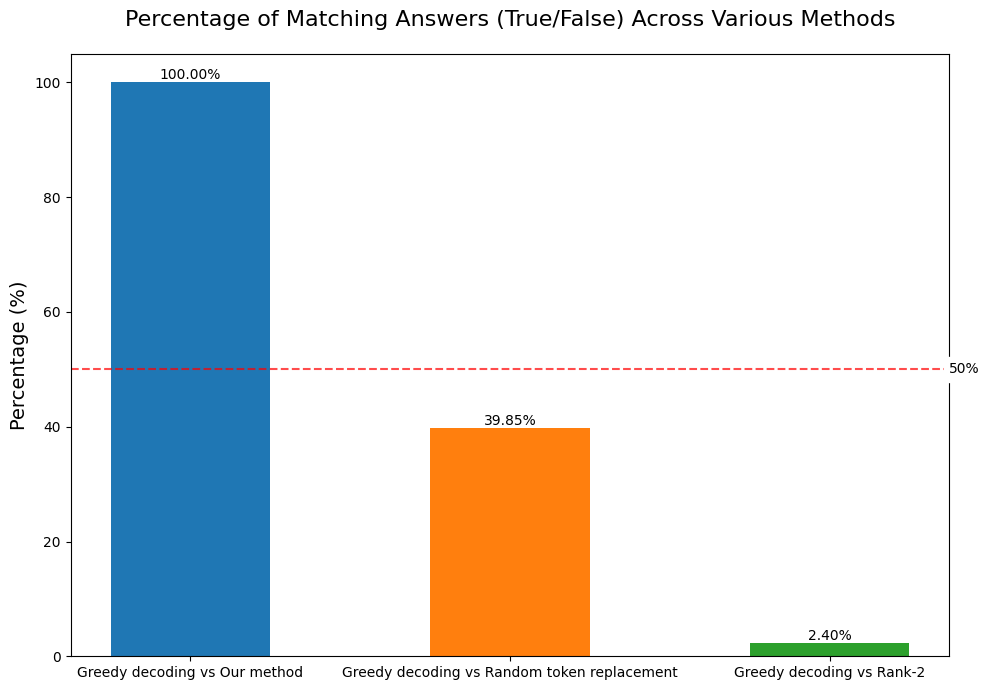

Modified Greedy Decoding:

I developed a modified greedy decoding procedure that:

- Runs standard greedy decoding

- When the top-ranked token is a filler, emits the second-highest-probability token instead

- Applies this substitution at every position in the sequence

This approach reproduced the non-filler COT results exactly (100% agreement) on the 3SUM task, indicating that the underlying computation remains intact despite the presence of filler tokens.
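A minimal sketch of this decoding loop is shown below. It is not the code from the repository: `next_logits` is a hypothetical callable standing in for the model, `toy_model` is a fake model written only to exercise the loop, and feeding the substituted token back into the context is my assumption about how the substitution is maintained.

```python
def decode_skipping_fillers(next_logits, prompt, max_new_tokens, filler="."):
    """Greedy decoding that emits the rank-2 token whenever rank 1 is a filler.

    next_logits: callable taking the token list so far and returning
                 a {token: score} mapping for the next position.
    """
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        logits = next_logits(tokens)
        ranked = sorted(logits, key=logits.get, reverse=True)
        choice = ranked[0]
        if choice == filler and len(ranked) > 1:
            choice = ranked[1]  # fall back to the second-highest token
        tokens.append(choice)   # assumption: the substitute is fed back in
    return tokens[len(prompt):]

def toy_model(tokens):
    """Fake model: filler on top for three steps, hidden step at rank 2."""
    hidden = ["s1", "s2", "s3"]
    step = len(tokens) - 1  # the prompt below has length 1
    if step < len(hidden):
        return {".": 2.0, hidden[step]: 1.0, "x": 0.0}
    return {"True": 2.0, ".": 1.0, "x": 0.0}

print(decode_skipping_fillers(toy_model, ["Q"], max_new_tokens=4))
# ['s1', 's2', 's3', 'True']
```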

Future Work:

- Development of more sophisticated decoding methods

- Extension to natural language reasoning tasks

- Enhancement of token hiding techniques beyond single filler tokens

- Identification of specific computational circuits involved in token hiding

Conclusion:

This investigation provides concrete evidence for how transformer models encode and process information in hidden COT sequences. The results demonstrate that computational paths remain intact even when obscured by filler tokens, suggesting new approaches for understanding and interpreting chain-of-thought reasoning in language models.

The code used for the experiments and analysis is available on GitHub: https://github.com/rokosbasilisk/filler_tokens/tree/v2

Appendix: Layerwise View of Sequences Generated via Various Decoding Methods

To provide a more detailed look at the results, I have included visualizations of the sequences generated by different decoding methods across the model’s layers.

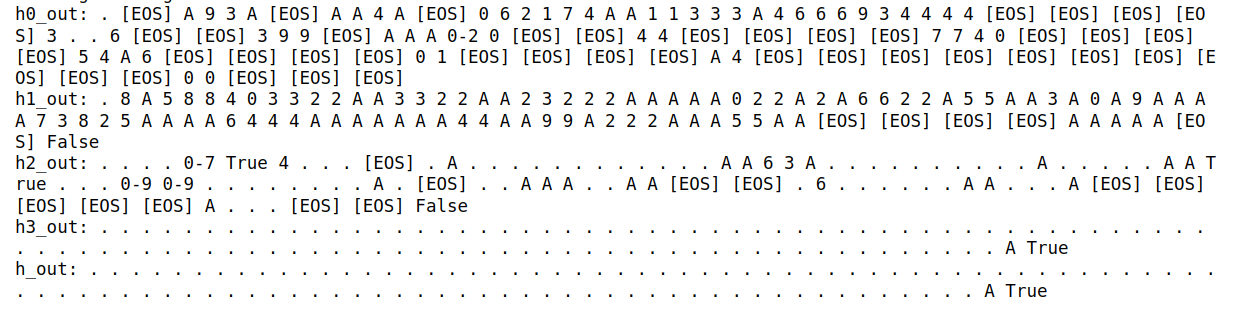

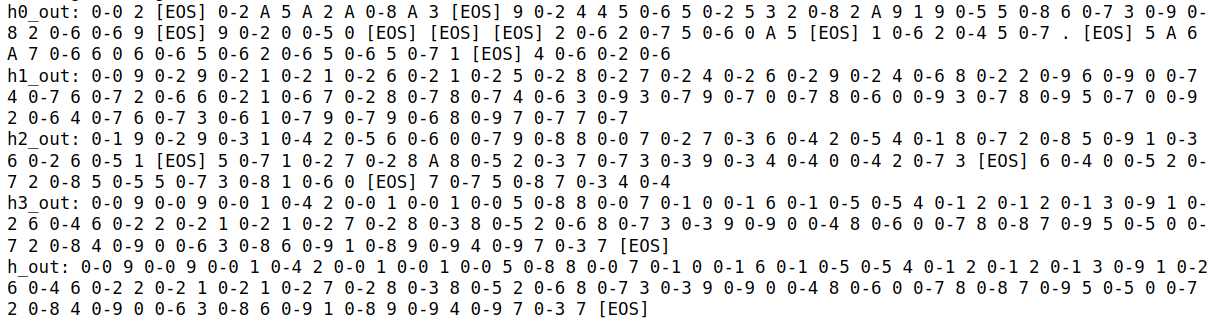

1. Greedy Decoding:

This plot shows the sequences generated by standard greedy decoding across different layers of the model.

2. Greedy Decoding with Rank-2 Tokens:

Here, I visualize the sequences generated when the top-ranked token (usually the filler token) is always replaced with the second-highest probability token.

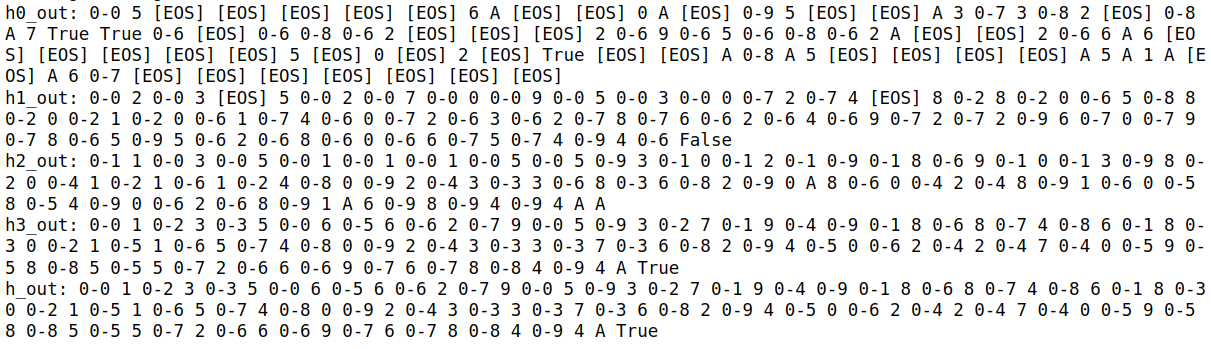

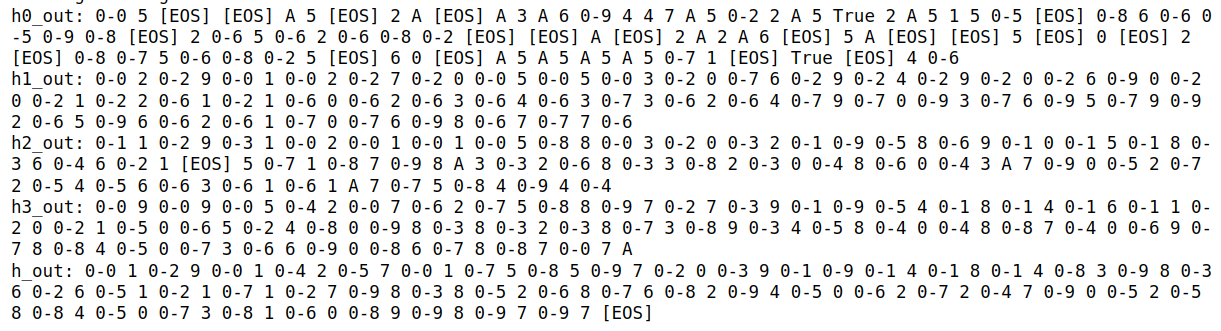

3. Greedy Decoding with Hidden Tokens Replaced by Rank-2 Tokens (My Method):

This plot demonstrates the proposed method, where I perform greedy decoding but replace filler tokens with the second-highest probability token.

4. Greedy Decoding with Hidden Tokens Replaced by Randomly Selected Tokens:

For comparison, this plot shows what happens when we replace filler tokens with randomly selected tokens instead of using the rank-2 tokens.
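The four decoding variants compared in this appendix differ only in how a token is picked at each position. A compact sketch of the four selection rules is given below. The mode names and the function `select_token` are hypothetical, and drawing the random replacement uniformly from the non-filler vocabulary is an assumption about how the baseline was set up.

```python
import random

def select_token(logits, mode, filler=".", rng=None):
    """Pick one token from a {token: score} mapping under a given rule."""
    ranked = sorted(logits, key=logits.get, reverse=True)
    top = ranked[0]
    if mode == "greedy":            # 1. plain greedy decoding
        return top
    if mode == "rank2":             # 2. always take the rank-2 token
        return ranked[1]
    if mode == "filler_to_rank2":   # 3. rank-2 only when rank 1 is a filler
        return ranked[1] if top == filler else top
    if mode == "filler_to_random":  # 4. random token when rank 1 is a filler
        if top != filler:
            return top
        candidates = [tok for tok in ranked if tok != filler]
        return (rng or random).choice(candidates)
    raise ValueError(f"unknown mode: {mode}")

logits = {".": 3.0, "7": 2.0, "2": 1.0}
for mode in ["greedy", "rank2", "filler_to_rank2", "filler_to_random"]:
    print(mode, select_token(logits, mode, rng=random.Random(0)))
```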

References:

- Pfau, J., Merrill, W., & Bowman, S. R. (2024). Let’s Think Dot by Dot: Hidden Computation in Transformer Language Models. arXiv:2404.15758.

- Wei, J., Wang, X., Schuurmans, D., et al. (2022). Chain-of-thought prompting elicits reasoning in large language models. arXiv:2201.11903.

- nostalgebraist (2020). interpreting GPT: the logit lens. LessWrong post.

- Touvron, H., Lavril, T., Izacard, G., et al. (2023). LLaMA: Open and Efficient Foundation Language Models. arXiv:2302.13971.